43 soft labels machine learning

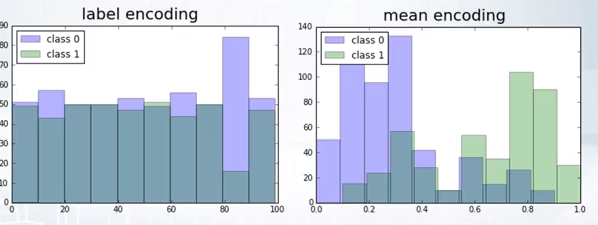

[D] Instance weighting with soft labels - r/MachineLearning: Suppose you are given training instances with soft labels, i.e., your training instances are of the form (x, y, p), where x is the input, y is the class, and p is the probability that x is of class y. Some classifiers allow you to specify an instance weight for each example in the training set.

Pseudo Labelling - Analytics India Magazine: There are three kinds of machine learning approaches: supervised, unsupervised, and reinforcement learning. Supervised learning, as we know, is where both data and labels are present. Unsupervised learning is where only data, and no labels, are present. Reinforcement learning is where agents learn from the rewards that their actions generate.
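
Returning to the instance-weighting question: one common approach, offered here as an assumption rather than as the thread's answer, is to split each binary soft-labelled row (x, y, p) into two hard-labelled rows, (x, y) with weight p and (x, 1 - y) with weight 1 - p. The plain-Python sketch below uses hypothetical helpers (`expand_soft_labels`, `weighted_log_loss`, `soft_cross_entropy`) and assumes binary classes {0, 1}. It checks that the weighted log loss on the expanded rows equals the soft-label cross-entropy, so a log-loss classifier that accepts per-example weights (for example via the `sample_weight` argument of scikit-learn's `LogisticRegression.fit`) optimizes the same objective.

```python
import math


def expand_soft_labels(rows):
    """Split binary soft-labelled rows (x, y, p) into weighted hard-labelled rows.

    Assumption: classes are {0, 1} and p is the probability that x has class y.
    Each row becomes (x, y, weight=p) and (x, 1 - y, weight=1 - p).
    """
    expanded = []
    for x, y, p in rows:
        expanded.append((x, y, p))
        expanded.append((x, 1 - y, 1.0 - p))
    return expanded


def weighted_log_loss(expanded, predict):
    """Instance-weighted negative log-likelihood; predict(x) returns P(class 1 | x)."""
    total = 0.0
    for x, y, w in expanded:
        q = predict(x)
        total -= w * math.log(q if y == 1 else 1.0 - q)
    return total


def soft_cross_entropy(rows, predict):
    """Cross-entropy computed directly against the soft targets."""
    total = 0.0
    for x, y, p in rows:
        q = predict(x)
        p1 = p if y == 1 else 1.0 - p  # soft probability of class 1
        total -= p1 * math.log(q) + (1.0 - p1) * math.log(1.0 - q)
    return total


def predict(x):
    """A fixed, hypothetical model: P(class 1 | x) = sigmoid(x)."""
    return 1.0 / (1.0 + math.exp(-x))


rows = [(0.5, 1, 0.9), (-1.0, 0, 0.7), (2.0, 1, 0.6)]  # made-up (x, y, p) rows
print(weighted_log_loss(expand_soft_labels(rows), predict))
print(soft_cross_entropy(rows, predict))  # same value, up to rounding
```

The expansion doubles the training set, which is the price of reusing an unmodified weighted learner instead of writing a custom loss.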

machine learning - Stack Overflow: A label is the output you want your model to predict, i.e., the target value attached to each training example. Suppose you feed a dataset to some algorithm and train a model that predicts gender as Male or Female from features such as age and height. After computing, the model returns Male or Female; that target value is called the label.

Soft labels machine learning

Label smoothing with Keras, TensorFlow, and Deep Learning: This type of label assignment is called soft label assignment. Unlike hard label assignment, where class labels are binary (i.e., positive for one class and negative for all other classes), soft label assignment allows the positive class to have the largest probability while all other classes have a very small probability.

Learning Soft Labels via Meta Learning - Apple: One-hot labels do not represent soft decision boundaries among concepts, and hence models trained on them are prone to overfitting. Using soft labels as targets provides regularization, but different soft labels might be optimal at different stages of optimization.

An Introduction to Confident Learning: Finding and ... To solve your problem, just train a classifier (start with logistic regression in sklearn if you aren't sure) to predict three possible labels: 0, 1, and -1. I recommend mapping the labels to 0, 1, 2. Then, after training, call classifier.predict_proba() and it will give you the probabilities for each class.
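
The smoothed target described in the label smoothing snippet can be written as (1 - alpha) * one_hot + alpha / K for K classes, so the true class keeps the largest probability and every other class gets a small, equal share. A minimal plain-Python sketch follows; `smooth_labels` and the default alpha = 0.1 are assumptions, although Keras applies the same rule through the `label_smoothing` argument of `tf.keras.losses.CategoricalCrossentropy`.

```python
def smooth_labels(class_index, num_classes, alpha=0.1):
    """Label smoothing: (1 - alpha) * one_hot + alpha / num_classes (hypothetical helper)."""
    if not 0.0 <= alpha < 1.0:
        raise ValueError("alpha must be in [0, 1)")
    uniform = alpha / num_classes  # share given to every class
    return [(1.0 - alpha) + uniform if k == class_index else uniform
            for k in range(num_classes)]


print(smooth_labels(2, 4, alpha=0.1))  # class 2 gets about 0.925, the rest 0.025 each
```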

[2009.09496] Learning Soft Labels via Meta Learning - arXiv: Nidhi Vyas, Shreyas Saxena, Thomas Voice.

Distilled One-Shot Federated Learning - DeepAI: Figure 1: Distilled One-Shot Federated Learning. (1) The server initializes a model, which is broadcast to all clients. (2) Each client distills its private dataset and (3) transmits synthetic data to the server. (4) The server fits its model on the distilled data and (5) distributes the final model to all clients.

Knowledge distillation in deep learning and its applications: Soft labels refer to the output of the teacher model. In classification tasks, the soft labels represent the probability distribution over the classes for an input sample. The second category, on the other hand, covers works that distill knowledge from other parts of the teacher model, optionally including the soft labels.

Label Smoothing: An ingredient of higher model accuracy: These are soft labels rather than hard labels (0 and 1). They ultimately give a lower loss when a prediction is incorrect, so the model is penalized slightly less for incorrect predictions.
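
To check the label smoothing claim on one hypothetical example: a confident but wrong three-class prediction is scored against a hard target and against a target smoothed with alpha = 0.1. The numbers are illustrative only; for a confident correct prediction the smoothed target gives a higher loss instead, which is what discourages overconfidence.

```python
import math


def cross_entropy(target, predicted):
    """H(target, predicted) = -sum_k target_k * log(predicted_k); zero-target terms skipped."""
    return -sum(t * math.log(q) for t, q in zip(target, predicted) if t > 0)


predicted = [0.90, 0.05, 0.05]  # hypothetical model output; the true class is index 1
hard = [0.0, 1.0, 0.0]
alpha, k = 0.1, 3
smoothed = [(1 - alpha) * h + alpha / k for h in hard]

print(cross_entropy(hard, predicted))      # about 3.00
print(cross_entropy(smoothed, predicted))  # about 2.90, i.e. a smaller penalty
```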

ARIMA for Classification with Soft Labels - Medium: In this post, we introduced a technique to carry out classification tasks with soft labels and regression models. First we applied it to tabular data, and then we used it to model time series with ARIMA. The technique is generally applicable in any context, and it also provides probability scores.

comparison - Stack Exchange: A soft label is one which has a score (probability or likelihood) attached to it. So an element is a member of the class in question with a probability/likelihood score of, e.g., 0.7; this implies that an element can be a member of multiple classes (presumably with different membership scores), which is usually not possible with hard labels.

Pros and Cons of Supervised Machine Learning - Pythonista Planet: Another typical task of supervised machine learning is to predict a numerical target value from some given data and labels. Supervised learning also has several disadvantages.

classification - Cross Validated: In the case of 'soft' labels like you mention, the labels are no longer class identities themselves, but probabilities over two possible classes. Because of this, you can't use the standard expression for the log loss. But the concept of cross entropy still applies; in fact, it seems even more natural in this case. With soft label $p$ and predicted probability $q$, the loss is $-[p \log q + (1 - p) \log(1 - q)]$, which reduces to the standard log loss when $p$ is 0 or 1.
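
A sketch of the regression route from the ARIMA post, under simplifying assumptions: a single feature and an ordinary least-squares line stand in for the post's models (ARIMA itself is not reproduced), and predictions are clipped into [0, 1] so they can be read as probability scores. `fit_line` and `clipped_score` are hypothetical helpers, not a library API.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x with a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def clipped_score(a, b, x):
    """Regression output clipped into [0, 1] so it reads as a probability score."""
    return min(1.0, max(0.0, a + b * x))


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
soft = [0.1, 0.3, 0.5, 0.7, 0.9]  # hypothetical soft labels P(class 1 | x)
a, b = fit_line(xs, soft)
print(clipped_score(a, b, 2.5))         # about 0.6
print(clipped_score(a, b, 10.0))        # 1.0 after clipping
print(clipped_score(a, b, 2.5) >= 0.5)  # threshold at 0.5 to recover a hard class
```

Clipping is the crudest way to keep scores in range; a model with a bounded output (for example a sigmoid link) avoids the need for it.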

Support Vector Machine - an overview | ScienceDirect Topics: Support vector machines (SVMs) are supervised learning models that analyze data and recognize patterns, used for classification and regression analysis [27]. An SVM works by constructing hyperplanes in a multidimensional space that separate cases of different class labels.

python - scikit-learn classification on soft labels ... The cross-entropy loss function handles soft labels in the target naturally. It seems that all loss functions for linear classifiers in scikit-learn can only handle hard labels, so the question is probably how to specify a custom loss function for SGDClassifier, for example.

Softmax Function Definition - DeepAI: Mathematically, the softmax function is $\sigma(\mathbf{z})_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}$, where all the $z_i$ values are the elements of the input vector and can take any real value. The denominator is the normalization term, which ensures that all the output values of the function sum to 1, thus constituting a valid probability distribution.

What is the difference between soft and hard labels? - reddit: Soft label = probability encoded, e.g. [0.1, 0.2, 0.5, 0.2]. Soft labels have the potential to tell a model more about the meaning of each sample.
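
The scikit-learn question above asks how to fit a linear classifier to soft targets. As a minimal sketch (not a scikit-learn API; `train_soft_logistic` is a hypothetical helper), the loss can be written directly: for binary soft cross-entropy the gradient with respect to the logit is simply q - p, so plain gradient descent works. The example assumes a single feature and generates its soft targets from a known logistic model, so the fitted weights should recover that model.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_soft_logistic(xs, ps, lr=0.5, epochs=2000):
    """Batch gradient descent on mean binary cross-entropy with soft targets.

    Model: q = sigmoid(w * x + b) for a single feature x (an assumption).
    The gradient of -[p*log(q) + (1 - p)*log(1 - q)] w.r.t. the logit is q - p,
    so soft targets need no special handling beyond using p in [0, 1].
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, p in zip(xs, ps):
            err = sigmoid(w * x + b) - p
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ps = [sigmoid(1.5 * x - 0.5) for x in xs]  # soft targets from a hypothetical model
w, b = train_soft_logistic(xs, ps)
print(round(w, 3), round(b, 3))  # should approach 1.5 and -0.5
```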

How to Label Data for Machine Learning: Process and Tools ... Whether the labelers are humans or machines, there should be a certain rate of agreement to ensure high label quality. This means sending each dataset to be checked by multiple labelers and then consolidating the annotations. Verification of labels: it's important to audit the labels to verify their accuracy and adjust them if necessary.
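
Consolidating several labelers' annotations is one natural source of soft labels. The sketch below turns votes into per-class vote fractions and computes a simple agreement rate (the share of labelers who chose the majority class). It assumes each labeler picks exactly one class; the helper names and data are hypothetical.

```python
from collections import Counter


def votes_to_soft_label(votes, classes):
    """Fraction of labelers choosing each class, in the order given by `classes`."""
    counts = Counter(votes)
    unknown = set(counts) - set(classes)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = len(votes)
    return [counts[c] / total for c in classes]


def agreement_rate(votes):
    """Share of labelers who agree with the majority label."""
    return Counter(votes).most_common(1)[0][1] / len(votes)


votes = ["cat", "cat", "dog", "cat", "fox"]  # five hypothetical labelers
print(votes_to_soft_label(votes, ["cat", "dog", "fox"]))  # [0.6, 0.2, 0.2]
print(agreement_rate(votes))  # 0.6
```

A low agreement rate can flag items for the audit step before they are used for training.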

Knowledge Distillation. Knowledge distillation is model ... Soft targets, i.e., labels predicted by a model, contain more information than binary hard labels because they encode similarity measures between the classes.
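
A sketch of how a teacher's soft targets are commonly produced and used in distillation, assuming the temperature-scaled softmax and the T²-scaled KL divergence from Hinton et al.'s distillation formulation (the KL differs from the soft cross-entropy only by the teacher's entropy, a constant for the student). Helper names and logits are illustrative. Raising the temperature moves probability onto similar classes, which is where the extra "similarity" information lives.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of logits / temperature, shifted by the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=4.0):
    """KL(teacher_T || student_T) scaled by T**2 (illustrative helper)."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [8.0, 5.0, -2.0]  # hypothetical logits for "cat", "dog", "car"
print(softmax(teacher, 1.0))  # nearly one-hot on "cat"
print(softmax(teacher, 4.0))  # "dog" gets far more mass than "car"
print(distillation_loss(teacher, [1.0, 1.0, 1.0]))
```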

Understanding Dice Loss for Crisp Boundary Detection - Medium: Therefore, the Dice similarity coefficient (DSC) ranges from 0 to 1, and larger is better. Thus we can use 1 - DSC as the Dice loss, so that minimizing it maximizes the overlap between two sets. In boundary detection tasks, the ground truth ...
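
A minimal soft Dice loss, assuming flattened per-pixel predicted probabilities and a ground-truth mask of the same length. Using probabilities in place of hard 0/1 predictions turns the overlap into a differentiable "soft" intersection. The `eps` smoothing term is an assumption; some formulations instead square the terms in the denominator.

```python
def soft_dice_loss(pred, target, eps=1e-7):
    """Return 1 - DSC with DSC = (2 * sum(p * g) + eps) / (sum(p) + sum(g) + eps).

    pred: per-pixel predicted probabilities; target: per-pixel ground truth
    (0/1 or soft values). eps avoids division by zero on empty masks.
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    intersection = sum(p * g for p, g in zip(pred, target))
    denom = sum(pred) + sum(target)
    dsc = (2.0 * intersection + eps) / (denom + eps)
    return 1.0 - dsc


pred = [0.9, 0.8, 0.1, 0.0]  # hypothetical flattened probability map
target = [1, 1, 0, 0]        # hypothetical ground-truth mask
print(soft_dice_loss(pred, target))  # about 0.105
```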

Features and labels - Coursera Learn how to translate business problems into machine learning use cases and vet them for feasibility and impact. Find out how you can discover unexpected use cases, recognize the phases of an ML project and considerations within each, and gain confidence to propose a custom ML use case to your team or leadership or translate the requirements ...

Efficient Learning of Classification Models from Soft ... soft-label further refining its class label. One caveat of applying this idea is that soft labels based on human assessment are often noisy. To address this problem, we develop and test a new classification model learning algorithm that relies on soft-label binning to limit the effect of soft-label noise.

Multi-Class Neural Networks: Softmax - Google Developers: Recall that logistic regression produces a decimal between 0 and 1.0. For example, a logistic regression output of 0.8 from an email classifier suggests an 80% chance of an email being spam and a 20% chance of it being not spam. Clearly, the sum of the probabilities of an email ...
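
To make the spam example concrete: the sketch below (helper names are illustrative) shows that a logistic output of 0.8 and its complement sum to 1, and that a two-class softmax over the logits [z, 0] gives exactly sigmoid(z), which is why softmax is the multi-class generalization of logistic regression. The logit log 4 is a hypothetical choice because sigmoid(log 4) = 4/5 = 0.8.

```python
import math


def sigmoid(z):
    """Logistic function: maps a real logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits):
    """Softmax with a max-shift for numerical stability."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


z = math.log(4.0)  # hypothetical "spam" logit; sigmoid(log 4) = 4 / 5
p_spam = sigmoid(z)
print(p_spam, 1.0 - p_spam)  # roughly 0.8 and 0.2, summing to 1
print(softmax([z, 0.0]))     # the same two probabilities
```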

Efficient Learning with Soft Label Information and ... Note that our approach of learning from auxiliary soft labels is complementary to active learning: while the latter aims to select the most informative examples, we aim to gain more useful information from those selected. This gives us an opportunity to combine these two approaches.

Semi-Supervised Learning With Label Propagation: Nodes in the graph then have soft labels, or label distributions, based on the labels or label distributions of nearby connected examples in the graph. Many semi-supervised learning algorithms rely on the geometry of the data induced by both labeled and unlabeled examples to improve on supervised methods that use only the labeled data.
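
The propagation idea can be sketched as a simple iterative scheme: unlabelled nodes repeatedly take the mean label distribution of their neighbours, while labelled nodes stay clamped to their one-hot labels. The plain averaging rule, the graph, and the helper name below are illustrative assumptions; scikit-learn ships its own `LabelPropagation` and `LabelSpreading` estimators in `sklearn.semi_supervised`.

```python
def propagate_labels(edges, seeds, num_classes, iterations=100):
    """Iterative label propagation on an undirected, unweighted graph (hypothetical helper).

    edges: list of (u, v) pairs; seeds: {node: class_index} for labelled nodes.
    Returns {node: label distribution}, i.e. a soft label for every node.
    """
    neighbours = {}
    for u, v in edges:
        neighbours.setdefault(u, []).append(v)
        neighbours.setdefault(v, []).append(u)
    uniform = [1.0 / num_classes] * num_classes
    dist = {}
    for node in neighbours:
        if node in seeds:
            dist[node] = [1.0 if k == seeds[node] else 0.0 for k in range(num_classes)]
        else:
            dist[node] = list(uniform)
    for _ in range(iterations):
        new = {}
        for node, nbrs in neighbours.items():
            if node in seeds:
                new[node] = dist[node]  # clamp labelled nodes
            else:
                new[node] = [sum(dist[n][k] for n in nbrs) / len(nbrs)
                             for k in range(num_classes)]
        dist = new
    return dist


# A path graph a - b - c - d with "a" labelled 0 and "d" labelled 1 (hypothetical).
soft = propagate_labels([("a", "b"), ("b", "c"), ("c", "d")], {"a": 0, "d": 1}, 2)
print(soft["b"], soft["c"])  # b leans to class 0, c leans to class 1
```

On this path the iteration converges to [2/3, 1/3] for b and [1/3, 2/3] for c, since each unlabelled node ends up as the average of its two neighbours.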

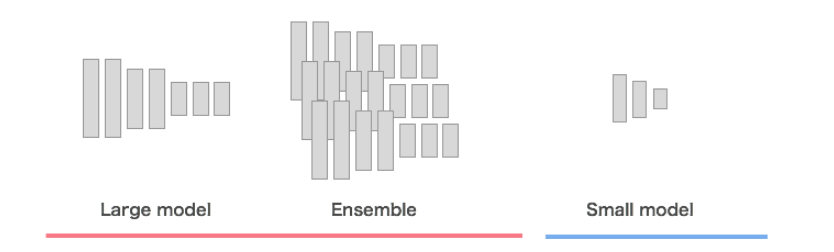

How to Develop Voting Ensembles With Python: A soft voting ensemble involves summing the predicted probabilities for each class label and predicting the class label with the largest summed probability. In this tutorial, you will discover how to create voting ensembles for machine learning algorithms in Python.
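
Soft voting in a few lines, as a sketch with made-up probabilities. Averaging instead of summing gives the same argmax, and optional weights let stronger models count more. `soft_vote` is a hypothetical helper; scikit-learn's `VotingClassifier(voting="soft")` provides this for fitted estimators. The example is chosen so that soft and hard (majority) voting disagree.

```python
def soft_vote(prob_lists, weights=None):
    """Weighted average of per-model class probabilities, then argmax.

    prob_lists holds one probability vector per model over the same classes.
    Returns (winning_class_index, averaged_probabilities).
    """
    if weights is None:
        weights = [1.0] * len(prob_lists)
    total_w = sum(weights)
    num_classes = len(prob_lists[0])
    avg = [sum(w * probs[k] for w, probs in zip(weights, prob_lists)) / total_w
           for k in range(num_classes)]
    winner = max(range(num_classes), key=avg.__getitem__)
    return winner, avg


models = [[0.9, 0.1], [0.4, 0.6], [0.45, 0.55]]  # made-up predictions from three models
winner, avg = soft_vote(models)
hard_votes = [max(range(len(p)), key=p.__getitem__) for p in models]
hard_winner = max(set(hard_votes), key=hard_votes.count)
print(winner, avg)   # soft voting picks class 0 (about 0.583 vs 0.417)
print(hard_winner)   # a majority of hard votes picks class 1
```

The confident first model outweighs two lukewarm ones under soft voting, which is the practical difference from counting hard votes.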

Regression - Features and Labels - Python Programming: With supervised learning, you have features and labels. The features are the descriptive attributes, and the label is what you're attempting to predict or forecast. A common example with regression might be predicting the dollar value of someone's insurance policy premium.

Learning classification models with soft-label information Materials and methods: Two types of methods that can learn improved binary classification models from soft labels are proposed. The first relies on probabilistic/numeric labels, the other on ordinal categorical labels. We study and demonstrate the benefits of these methods for learning an alerting model for heparin induced thrombocytopenia.

The Ultimate Guide to Data Labeling for Machine Learning In machine learning, if you have labeled data, that means your data is marked up, or annotated, to show the target, which is the answer you want your machine learning model to predict. In general, data labeling can refer to tasks that include data tagging, annotation, classification, moderation, transcription, or processing.
